With Advanced Micro Devices Inc. making its big push into the AI data-center market at its event in San Francisco this week, following Nvidia Corp.’s similar event in late March, one analyst sees both companies launching into a tighter-than-expected market.

The growth of artificial-intelligence products, which rely on massive data centers run by cloud-service providers or “hyperscalers,” has figured heavily in the performance of both Nvidia and AMD this year. Nvidia shares are up 187% year to date, giving the company its first $1 trillion valuation at the close on Tuesday, while AMD’s are up 95%. In comparison, the S&P 500 is up 14% and the PHLX Semiconductor Index is up 46%.

While AI has turbocharged chip stocks and has built expectations for increased spending in the second half of 2023 from hyperscalers, BofA Securities analyst Vivek Arya said in a note released Wednesday that expectations may have overshot the mark.

Arya, who prefers Nvidia with a buy rating (versus his neutral rating for AMD and underperform rating for Intel Corp.), said his latest survey shows total capital expenditures at cloud-service providers growing 2.5% to $171.5 billion, rather than his previous estimate of 4.7% growth to $174.5 billion.

That figure takes into account slowing growth in China, however, where spending is expected to decline 10% to $8.5 billion. Stripping out China, U.S. capex is expected to rise 3.2% to $163 billion, versus a 2% gain in 2022.
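The capex figures above can be cross-checked with a few lines of arithmetic. The following is a minimal sketch that uses only the numbers quoted in the article; the variable names and the `implied_prior` helper are illustrative assumptions, not part of the analyst's note.

```python
# Figures quoted in the article, in billions of U.S. dollars.
total_capex = 171.5   # surveyed cloud capex, all regions
china_capex = 8.5     # China portion, after an expected 10% decline

# Stripping out China should reproduce the quoted $163 billion figure.
ex_china_capex = total_capex - china_capex


def implied_prior(current: float, growth_pct: float) -> float:
    """Back out the prior-year level from a current level and a growth rate."""
    return current / (1 + growth_pct / 100)


# Prior-year levels implied by the quoted growth rates (hypothetical helper).
prior_total = implied_prior(total_capex, 2.5)
prior_china = implied_prior(china_capex, -10.0)
prior_ex_china = implied_prior(ex_china_capex, 3.2)

print(f"Capex excluding China: ${ex_china_capex:.1f}B")
print(f"Implied prior-year total: ${prior_total:.1f}B")
print(f"Implied prior-year China + ex-China: ${prior_china + prior_ex_china:.1f}B")
```

The two implied prior-year totals agree to within rounding, which suggests the quoted growth rates and dollar figures are internally consistent.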

AMD’s and Nvidia’s customers include major hyperscalers such as Amazon.com Inc.’s AWS, Microsoft Corp.’s Azure, Alphabet Inc.’s Google Cloud and Oracle Corp.’s Cloud Infrastructure. Meanwhile, Amazon also offers free machine-learning tools for users; Microsoft backs one of the biggest names in generative AI, OpenAI and its ChatGPT product; and Google has its generative-AI product Bard and Google Cloud enterprise offerings.

And having both the AI and the infrastructure on which it runs appears to have a multiplier effect. Recently, Susquehanna Financial analyst Christopher Rolland pointed out that the number of Microsoft Azure OpenAI customers had soared by 10 times quarter over quarter and that Microsoft is “increasing capex sequentially to scale their AI infrastructure.”

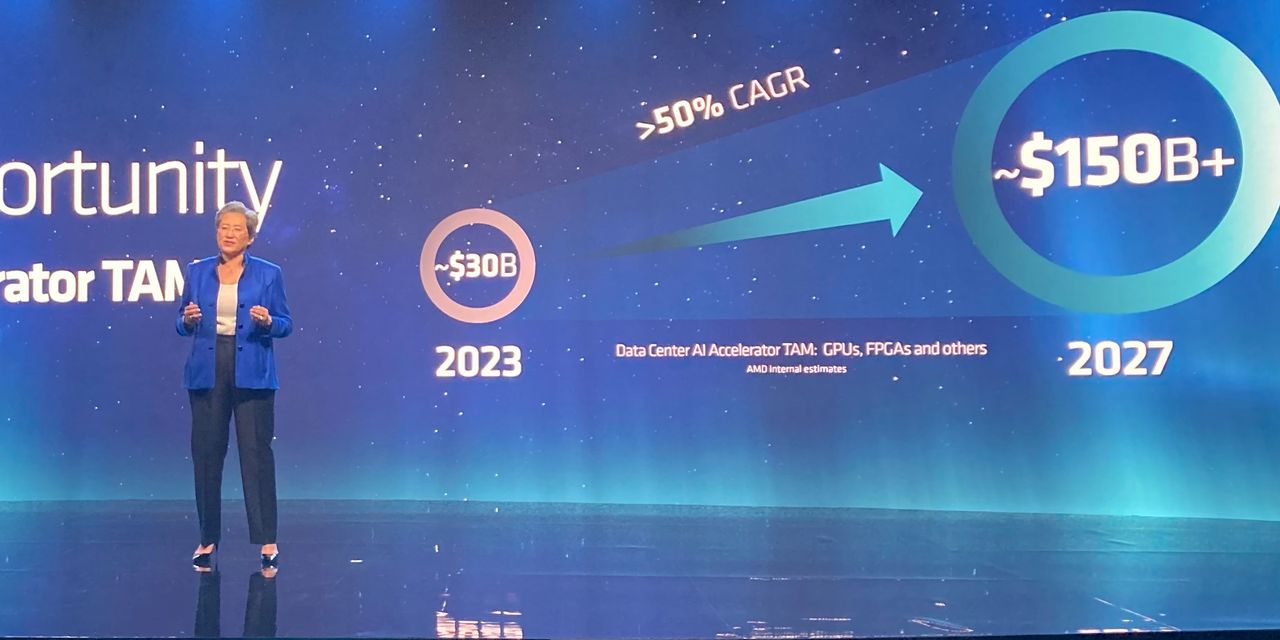

At AMD’s data-center event on Tuesday, Chair and Chief Executive Lisa Su said she expects the total addressable market for data-center AI accelerators to soar to more than $150 billion in 2027, from a current $30 billion. At the event, AMD launched a slew of new AI data-center chips along with its Instinct MI300X accelerator and its Bergamo cloud-native Epyc CPU, which Meta Platforms Inc. has recently adopted.
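To put Su's forecast in perspective, the implied compound annual growth rate can be worked out directly. This is a rough sketch that assumes the "current" $30 billion refers to 2023 and treats $150 billion as the 2027 level (the forecast says "more than"); the `cagr` function name is an illustrative choice.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two levels over a number of years."""
    return (end / start) ** (1 / years) - 1


tam_now = 30.0       # billions of dollars; assumed to be the 2023 level
tam_2027 = 150.0     # billions of dollars; lower bound of the forecast
years = 2027 - 2023  # assumption: four annual compounding periods

rate = cagr(tam_now, tam_2027, years)
print(f"Implied growth rate: {rate:.1%} per year")
```

Under those assumptions, the market would need to grow by roughly half again every year for four years.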

Still, as the hyperscaler market matures and AI grows, BofA’s Arya is concerned that chip makers are going to have to carve their positions into tight budgets.

“The dilemma is how can slowing capex absorb accelerating growth in compute/capex-intensive generative AI (genAI) and large language model (LLM) based applications?” Arya said. “Indeed, accelerated servers containing several GPUs could be 10x+ the price of a traditional CPU-only server, with accelerator TAM (total addressable market) expected to surge to $100-$150 [billion] by CY27E, potentially 25-50% of cloud capex, which seems unsustainable.”
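Arya's quote implies a level of total cloud capex in 2027, which can be backed out from the two ranges he gives. The quote does not say which end of the TAM range pairs with which share, so this sketch simply computes all four combinations; the variable names are illustrative, and the comparison against the $171.5 billion survey figure is an assumption about what baseline is relevant.

```python
# Ranges taken from the quote: accelerator TAM in billions of dollars,
# and the share of cloud capex that TAM could represent.
tam_range = (100.0, 150.0)
share_range = (0.25, 0.50)

# Implied total cloud capex for every TAM/share pairing.
implied_capex = {
    (tam, share): tam / share
    for tam in tam_range
    for share in share_range
}

low = min(implied_capex.values())
high = max(implied_capex.values())

capex_2023 = 171.5  # surveyed total cloud capex quoted earlier in the article

for (tam, share), capex in sorted(implied_capex.items()):
    print(f"TAM ${tam:.0f}B at {share:.0%} of capex -> total capex ${capex:.0f}B")
print(f"Implied range: ${low:.0f}B to ${high:.0f}B, versus ${capex_2023}B surveyed")
```

Every pairing implies total capex above the current survey figure, which is one way to read the tension Arya describes between slowing capex growth and accelerating AI spending.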

One missing piece of the dilemma could be the ability of cloud-service providers to “extend the upgrade cycle of conventional CPUs, possible now that depreciation schedules are stretched” to between five and six years, from the usual three to four.

Arya also noted that another piece could be that capital allocations differ among cloud-service providers, with Amazon and Microsoft spending only about 35% to 40% of capex on hardware, while Google and Meta spend closer to 60%.

The analyst also noted that certain accelerated architectures could provide energy and operating expenditure savings.
